The rise of information pathogens

and the merciless battle to infect our minds

How much should we think about the role of information in society?

Background:

Headline after headline. The US Department of Energy assesses a lab leak as likely, with low confidence, the Wall Street Journal blasts out. The FBI director is even moderately confident, he assures the public in widely watched press conferences. Various US Senate committees get ready to hold show trials. Many commentators claim vindication based on sensationalist headlines, not data.

Then another alleged bombshell: An animal started the pandemic. The headline referred to a recent presentation to the WHO’s SAGO group of new evidence for an animal origin of the pandemic. Of course, this story, too, had to hit the news before scientists could make their results accessible to the wider public. It is a great scoop for a journalist, given the political controversy and interest around the origin of Covid. Respectable outlets, from the New York Times to Science magazine, jumped on the bandwagon. Hours later, the WHO gave a sassy press conference scolding China about transparency, without disclosing data.

I believe the way the last few weeks played out was a mistake. The latest media rush to break the news before scientific data are publicly available might do more harm than good, both to science and to researchers in China, who swiftly removed data once international attention hit. The opinion-heavy coverage, from the Wall Street Journal to The Atlantic, might even be worse for the public.

Currently, the details behind both scoops are still hazy; those spreading the information do not seem to have consulted the intelligence agents and researchers who performed the work.

Subsequently, multiple scientists involved in the latest WHO presentation have expressed frustration with how their new analytical work is being taken to the public before it is even completed.

“There is genetic evidence of multiple susceptible species,” […] “We didn’t intend to make this public prior to completing our analysis… [and] we want to make sure we’re confident in our analyses before releasing [it]. But the information is important.” — Prof. Angela Rasmussen to The Telegraph

[To journalists] We were not planning to communicate results before our report was finished. Finishing the report is my current priority. I won’t give interviews before the report is published. Meanwhile, you can find background information in this article — Flo Debarré on Twitter

One researcher involved in the analysis even told me in confidence that they only commented on the work because they feared the media would otherwise put out plainly false claims without context.

This media rush around a scientific presentation certainly raised eyebrows and ruffled some feathers, and not only among scientists. Lab leak advocates cried foul, claiming this was a PR stunt by China, or an organized US media campaign to cover up a lab leak. They are wrong about the latter, but not necessarily wrong to be upset about how it played out. After more than three years, it is hard to justify the constantly breathless coverage surrounding the origin topic, especially the round-the-clock baseless speculation about a lab leak. Media coverage that emphasizes facts, context, and accuracy over speed would certainly be preferable.

But I feel that cat got out of the bag a long time ago. The attention economy rewards being first, not being right. On any topic.

As for the recent ‘news’, more details and scientific results are expected to arrive through the proper scientific channels in the coming days. It would be good to wait until then to form an opinion. At least with the WHO, data will follow. Whether the DoE ever intends to come forward with any information is unclear.

However, this whole saga of the last two weeks gives me the chance to talk a little about the role of media manipulation in shaping public understanding of scientific issues. We also need to talk about a novel threat that few seem to be aware of.

So let’s do that in the meantime, cool?

Chapter 1: Information pathogens

What is the causative agent of the infodemic? — George Gao, China CDC Weekly

Information is not what it used to be. Technological disruption and the attention economy have led to the commodification of information into a new type of digital product that we exchange for entertainment, services, profit, or power.

This new product is unusual as a commodity because, unlike most other products we consume, it has the ability to shape our perception of reality. (Drugs would be an exception; fittingly, certain types of information can be addictive too.)

Information products can shape our perceptions in obvious ways (e.g., somebody explaining the rules of a poker game changes how we perceive it) and in less obvious ways (e.g., through framing effects, the illusory truth effect, and an array of other mechanisms).

Because of this perception-altering ability, information products can also be used to persuade, manipulate, or control people.

This is not new. But I believe the current media coverage around the Covid origin controversy holds a valuable lesson about information that we have not yet learned. Fittingly, it also has to do with something viral: namely, how information spreads online.

What the internet and the attention economy changed is how information flows through society and how we humans consume it.

Humans in general have an interesting relationship with information. We have strong preferences to seek and amplify information that either confirms our preconceived notions, provides us with opportunities for social networking, or engages us emotionally. When information is abundant, we pick and choose what we want to hear, not necessarily what we ought to hear.

This simple human preference means that certain information products (e.g., a funny meme, a tweet we like, an outrageous video, or a sensationalist article) have an easier time spreading through society than their competition. This property is called information velocity.

Information products have always competed for society’s attention. Differences in velocity are not necessarily a bad thing, especially in a crisis. Take the war in Ukraine, and how the horrible pictures of Russian atrocities in Bucha and elsewhere swept around the world, captured our attention, and led to action and enduring support. Or think about Covid, and how the news of people dropping dead in Lombardy, Northern Italy, rallied countries and people in Europe to adopt non-pharmaceutical interventions (NPIs) and save lives (Flaxman S. et al., Nature, 2020). We want information products that are highly relevant to us to have high velocity.

Unfortunately, the velocity of information products is independent of what we traditionally valued about information, i.e., the relevance, accuracy, context, utility, or truthfulness of its content.

There are a few noticeable characteristics:

- Velocity is not related to the merit of information content. Misleading, false, or harmful information about an issue can have much higher velocity than competing good information on the same issue. A meme showing 2+2=5 might spread a thousand times better than a meme showing 2+2=4, for a variety of reasons, sarcasm and irony included.

- Velocity depends on the environment. The same information product can differ dramatically in velocity across different information environments. Something that goes viral on Reddit might not go viral on Facebook, for example. More sinister still, information microtargeted at specific communities, echo chambers, or niche audiences might spread faster and further than information seeded organically or randomly, which might not spread at all and simply die out.

- Velocity is a property of information that can be optimized externally. Two identical articles can have very different velocities depending on their headline. Two identical videos can have very different velocities by just changing the thumbnail. Two identical memes can have very different velocities depending on who has written them. Two identical tweets can have very different velocities depending on the time they are first posted. There is a whole industry trying to figure these dynamics out.

Optimizing velocity comes with a big catch:

When the value of information does not matter, when the information environment gets artificially distorted, and when harmful information is recklessly optimized for spread, information products turn into information pathogens.

Information pathogens are toxic organisms that we get exposed to without seeking them out, something that breaks into our attention and minds against our will.

When talking about information pathogens, I find that analogies to biology, especially virology, are quite useful.

While biological pathogens compete over space in our host bodies, information pathogens compete over space in our minds.

During the pandemic, many talked about the role of mis- and disinformation in shaping public perception. The WHO speaks of an infodemic of false and misleading information. The currently embattled George Gao of the Chinese CDC calls for infodemiology, the study of information viruses. All try to wrap their heads around why so many false rumors about the pandemic, NPIs, vaccines, masks, and so on have sabotaged public health responses worldwide.

During times of crisis, bad information can do a lot of harm. That makes information pathogens uniquely dangerous. Information pathogens are the reason we have to talk and think about information velocity much more than we do today.

Harmful information pathogens compete with useful information products over our attention. Their velocity, not the quality of content, defines who wins.

One last virus analogy to hammer this point home:

Velocity is a metric for the transmission efficacy of information given a particular content payload, its viral packaging, and its host environment. Basically the R0 of information, a measure of contagiousness.
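To make the R0 analogy a bit more concrete, here is a minimal sketch in Python. It assumes a deliberately idealized model that is not part of the original argument: everyone who engages with an item passes it on to `r0` new people on average in each sharing generation, with no saturation and no overlapping audiences. The function name `expected_reach` and all numbers are hypothetical, chosen only for illustration.

```python
def expected_reach(r0: float, seeds: int, generations: int) -> list[float]:
    """Expected number of newly reached people in each sharing generation.

    Idealized branching model (an assumption, not an empirical model):
    everyone who engages passes the item on to `r0` new people on average,
    with no saturation and no overlapping audiences.
    """
    sizes = [float(seeds)]
    for _ in range(generations):
        sizes.append(sizes[-1] * r0)
    return sizes


# Same 10 seed posts, two hypothetical velocities.
accurate = expected_reach(r0=0.8, seeds=10, generations=10)
pathogen = expected_reach(r0=2.0, seeds=10, generations=10)
print(f"total reach, R0=0.8: {sum(accurate):.0f}")
print(f"total reach, R0=2.0: {sum(pathogen):.0f}")
```

With the same ten seed posts, an item with an R0 of 0.8 reaches only a few dozen people in total before petering out, while an item with an R0 of 2 reaches over 20,000 within ten generations. Real networks saturate, so treat this as intuition, not a forecast.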

And this is the crux of the matter: No amount of good information can win the competition over our attention if its velocity is much lower than that of information pathogens on the same or related topics.
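The competition itself can be sketched with two growing quantities. This toy model is an assumption for illustration only: each round, the exposure of each information product is multiplied by its own hypothetical velocity factor, and we track what share of total exposure the good information holds. The function `attention_share` and the numbers (a 1000-to-1 head start for good information, factors of 1.1 versus 1.5) are made up.

```python
def attention_share(good_start: float, bad_start: float,
                    good_velocity: float, bad_velocity: float,
                    rounds: int) -> float:
    """Share of total exposure held by the good information after `rounds`.

    Toy model (an assumption): each round, every product's exposure is
    multiplied by its own velocity factor; nothing else changes.
    """
    good, bad = float(good_start), float(bad_start)
    for _ in range(rounds):
        good *= good_velocity
        bad *= bad_velocity
    return good / (good + bad)


# Hypothetical: good information starts with a 1000-to-1 head start,
# but the information pathogen grows faster each round.
for rounds in (0, 10, 20, 30):
    share = attention_share(1000, 1, 1.1, 1.5, rounds)
    print(f"round {rounds:2d}: good information holds {share:.1%} of exposure")
```

Under these made-up numbers, the good information still holds roughly two thirds of the exposure after 20 rounds, but less than a tenth after 30. A head start delays the outcome; it does not change it as long as the velocity gap persists.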

As we have seen in the pandemic, the virus variants that spread better between humans are the ones that sweep the world, outcompeting other variants, overwhelming our innate and acquired defenses, and making people sick. Information pathogens are not that different; they just make people sick with false perceptions and beliefs.

Just like real viruses, viral information pathogens cripple our societies.

I think we all have a duty to thwart, contain and eliminate these pathogens. But where do they come from? And why are we so vulnerable to them?

Chapter 2: Velocity hacking

“The universe is not required to be in perfect harmony with human ambition.” — Carl Sagan

How does anybody make money in the attention economy and on social media platforms?

To be very simplistic: Through content that captures attention.

What matters for content creators is how many people engage with their content, and how likely consumers are to come back for more. This is also what the content-ranking algorithms dictate, the same algorithms that made the platform companies (or rather, their shareholders) so immensely wealthy. The algorithms optimize for, and especially reward, the content creators who most effectively steal your time, attention, and engagement, so that your data can be sold to the highest bidder.

Any content creator who peddles information for cash or influence (e.g., marketers, influencers, even independent journalists, news outlets, or organizations) also has to optimize information velocity to maximize profits.

Velocity hacking is the true business model of information merchants.

And while there are legitimate ways to do so (e.g., presenting quality content more accessibly; being more relevant, timely, or contextual; using inclusive language; creating helpful illustrations and visualizations; using trustworthy amplifiers; community engagement strategies…), I believe we are all far too naive about the many illegitimate tactics and practices out there.

Trying to understand these tactics has become something of a pet interest of mine.

I have previously written about the communication tactics that quacks, fake experts, influencers, political commentators, trolls, gurus, charlatans, contrarians, pundits, and other discourse manipulators use to change public perception of scientific issues through noise pollution.

What I have not mentioned yet is that many of these actors are also pretty good velocity hackers. They have to be to make any money in the attention economy.

Again, the boundary between legitimate optimization of content and manipulative, toxic practice is not always easy to draw. We will now focus on a few examples from toxic media manipulators at the far end of the spectrum, but make no mistake: there is a lot of grey zone in between (and we will get to that in the next chapter).

Alright, let’s observe some velocity hacking in action!

Sometimes, influencers just sift through the current energy and mood of the news cycle and try to connect what is currently attracting eyeballs with their ideological pet peeves.

A true masterclass of velocity hacking comes from a partisan blogger and culture war grifter who cosplays as an independent journalist on Substack (his content is political commentary, election denial, and rehashed conspiracy myths).

He clipped a video snippet from antivaxx activist RFK Junior to set up his content, which included direct tagging of the two amplifiers in question, plus some storytelling about what the video clip shows. He got pretty good engagement on it, when suddenly Elon Musk (the white whale of amplifiers) stumbled on the clip and commented, which made his tweet go viral. But that was luck, one cannot always get Elon Musk to comment, you might object. True.

BUT what was not luck was his next tweet. A day later, he decided to take a screenshot of his previous tweet with Elon Musk’s comment in the picture, add some yellow highlights and a big “THREAD” announcement, and double dip on the attention. Check out this Matryoshka doll setup below:

That is velocity hacking, in a nutshell. Brilliantly done and rewarded with millions of views for essentially worthless commentary. The attention economy thrives on this type of content.

We humans are pretty creative when it comes to exploiting algorithmic systems and manipulating others. There is a staggering variety of strategies, services, and myths surrounding velocity hacking, because it is so incredibly lucrative. Many antivaxx activists have become multi-millionaires by peddling conspiracy theories on Substack and selling supplements.

How can society make sense of this? How can people know whether something is popular because it is important, or because somebody hacked the system?

I wish I had good answers for these questions. I have not wrapped my head around it either. But let’s try to finish this chapter with some basic intuition about toxic velocity hacking tricks through my experience with the lab leak conspiracy myth.

A little case study:

Let’s see what a simple Twitter search yields when typing “lab leak min_faves:10000” (this filters for posts containing the words “lab leak” that were liked at least 10,000 times), restricted to the week after the Wall Street Journal story went viral. Many influencers and discourse manipulators could not resist parachuting into the lab leak issue and reaping millions of views. Can we identify some common features in these viral tweets?

Now as a responsible journalist, institution, or media outlet, it might be easy to lean back, point fingers at toxic influencers, and bathe in the sun rays of moral superiority. That would be a mistake though.

How exactly do you think we got to this point?

Chapter 3: Information cascades

With the easy availability of information about other users’ choices, the Internet offers an ideal environment for informational cascades. — Duan W. et al., MIS Quarterly, 2009

After the DoE report media coverage, there was frustration among scientists and science writers (myself included) about how evidence-free claims can flare up around the world and manipulate public conversation on the Covid origins topic.

Two weeks later, the circumstances reversed in an interesting way when The Atlantic’s headline promising new evidence (without showing it) flared up and swept around the world to manipulate public opinion.

Being skeptical about reports of information that contradicts one’s worldview is generally healthy.

There is however a remarkable difference in attitude between scientists and conspiracy theorists when it comes to dealing with new information.

Let’s have a quick look at how lab leak advocates reacted:

Conspiracy theorists tend to be fretful when new data emerge, especially when the data do not neatly align with their entrenched worldview. Routinely, their skepticism tips over into assuming a malicious plot, science denial, or outright rejection of reality.

In contrast, scientists tend to be open to new data, actively seek it out or create it, and are motivated to find data that challenges previous assumptions.

In case you are curious, here was my reaction (which was certainly more pungent and less careful than your average scientist):

So what exactly are information cascades?

In many social and economic situations, individuals are influenced by the decisions of others. An information cascade describes a sequence of decisions in which each person’s choice is shaped by the choices of those before them, such as whether to buy a new product.

An information cascade is generally accepted as a two-step process. For a cascade to begin an individual must encounter a scenario with a decision, typically a binary one. Second, outside factors can influence this decision (typically, through the observation of actions and their outcomes of other individuals in similar scenarios) — Wikipedia

However, with the rise of social media, the term “information cascade” has become broader and muddier, standing in for phenomena such as information diffusion, social persuasion, or influence.

I personally find the concept really useful for social networks because I basically see information on social media as a product, and we make the binary decision to reject or buy it in the form of liking/sharing/commenting on it. (In this model, how we feel about it doesn’t matter. The moment we engage with it, we ‘bought’ it)

The point is that we are not making our decisions to like/share/comment based on our own knowledge or desire alone, but also based on previous decisions made by others. If an influencer engages with a piece of content, his followers will see this and might be nudged to take the same decision to engage. If a piece of content has been visibly engaged with by multiple people in our social circle, our decision to engage with it becomes more rational and likely. At some point, after seeing almost everybody engaging with it, that decision to engage becomes a near certainty. Et voila, we have an information cascade.
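One common way to formalize this kind of nudging is a threshold model in the spirit of Granovetter’s threshold models of collective behavior; it is my choice of illustration here, not a model the studies cited in this chapter necessarily use. Each user engages once the fraction of accounts they follow that have already engaged reaches a personal threshold. The network, the user names, and the thresholds below are hypothetical.

```python
def threshold_cascade(follows: dict[str, set[str]],
                      thresholds: dict[str, float],
                      seeds: set[str]) -> set[str]:
    """Return every user who ends up engaging with a piece of content.

    follows: user -> set of accounts whose engagement that user can see.
    thresholds: user -> fraction of those accounts that must engage first.
    seeds: users who engage at the start (e.g. an influencer).
    """
    engaged = set(seeds)
    changed = True
    while changed:  # repeat until nobody new is nudged into engaging
        changed = False
        for user, circle in follows.items():
            if user in engaged or not circle:
                continue
            seen_engaging = len(circle & engaged) / len(circle)
            if seen_engaging >= thresholds[user]:
                engaged.add(user)
                changed = True
    return engaged


follows = {
    "influencer": set(),
    "a": {"influencer", "b"},
    "b": {"influencer", "a"},
    "c": {"influencer", "d"},
    "d": {"c", "e"},
    "e": {"d"},
}
thresholds = {user: 0.5 for user in follows}
thresholds["d"] = 0.6  # one slightly more skeptical user
print(sorted(threshold_cascade(follows, thresholds, {"influencer"})))
```

In this toy network, a single slightly more skeptical user (`d`, with a threshold of 0.6 instead of 0.5) is enough to stop the cascade before it reaches `e`. Lower that threshold to 0.5 and everybody engages.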

Our participation in information cascades makes us party to their effects.

While not all modes of information diffusion are cascades, there is one feature of information cascades that is remarkable:

Information cascades restructure our social connections.

For example, viral news coverage can create political polarization by sorting social networks along political lines.

[…] information cascades can create assortative social networks, where people tend to be connected to others who are similar in some characteristics. Tweet cascades increase the similarity between connected users, as users lose ties to more dissimilar users and add new ties to similar users. — (Myers & Leskovec, Proceedings of the 23rd International Conference on World Wide Web, 2014)

Twitter users who follow and share more polarized news coverage tend to lose social ties to users of the opposite ideology. (Tokita CK. et al., PNAS, 2021)

Information cascades both amplify and make us vulnerable to the spread of viral information pathogens.

This seems to be a unique feature of our interconnected information age, which is already distorted by algorithmic curation, echo chambers, filter bubbles, and other mechanisms that reduce the breadth and diversity of our information landscape. This makes us more vulnerable to contagion, especially by information pathogens custom-crafted for our weaknesses and biases.

Before the internet, anybody infected with an information pathogen (let’s say a conspiracy theory) would come into contact with many people who had some immunity to it, reducing and containing the spread. Some might even help the infected person get over their false belief.

Information pathogens get shaped in the diverse ecosystems of the internet. Most of them never infect more than a handful of people before they fizzle out. What makes them truly dangerous is when they become part of information cascades that restructure social connections.
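Why most information pathogens fizzle out while a few take off can be illustrated with a classic branching-process calculation. As an assumption for illustration, suppose each person who engages with an item triggers a Poisson-distributed number of new engagements with mean `r0` (a Galton-Watson branching process). The probability that a chain started by a single post eventually dies out is then the smallest solution of q = exp(r0 (q - 1)) in [0, 1], which simple fixed-point iteration from q = 0 finds. The function name `fizzle_probability` is hypothetical.

```python
import math


def fizzle_probability(r0: float, iterations: int = 10_000) -> float:
    """Probability that a sharing chain started by one post eventually dies out.

    Assumes a Galton-Watson branching process with Poisson(r0) new
    engagements per engaged person (an illustrative assumption). The
    extinction probability is the smallest root of q = exp(r0 * (q - 1))
    in [0, 1]; iterating that map from q = 0 converges to it.
    """
    q = 0.0
    for _ in range(iterations):
        q = math.exp(r0 * (q - 1.0))
    return q


for r0 in (0.8, 2.0):
    print(f"R0={r0}: chain fizzles out with probability {fizzle_probability(r0):.3f}")
```

For r0 below 1, every chain eventually dies out (q = 1). Even for r0 = 2, about 20% of chains started by a single post still fizzle out on their own, which hints at why seeding many posts, or seeding them in receptive niches, pays off for velocity hackers.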

Today, there is a specific blend of anti-establishment, anti-science gurus that have contributed dramatically to the popularization of the lab leak myth.

These conspiracy myth superspreaders created and maintained a niche audience where circulating information pathogens can breed ever-new variants over time. And just as with biological pathogens, these new variants become more adapted to their hosts and optimized for transmission, sometimes causing another wave of media and public attention.

That’s largely the reason why the boring DoE assessment update hit like a bomb, and why the breathless announcement of new raccoon dog data was received with fretful outrage.

Velocity hackers knew exactly how to spin the stories to create another information cascade for profit, on the backs of fragmented audiences and their vulnerabilities to such pathogens.

However, all this unintentional social engineering and crafty velocity hacking comes at a price. Once optimized and released, information pathogens are outside anyone’s control. (Also: where is the gain-of-function outrage when it comes to information pathogens?)

What we currently underestimate is that the ruthless exploitation of online ecosystems can create something dangerous: The opportunity for novel information pathogens to spill over and infect our minds.

Information pathogens, just like biological viruses, develop a life of their own. They take over minds, mutate over time to become more infectious, and even chronically entrench themselves in the form of beliefs and worldviews. And while biological pathogens cause health crises, information pathogens contribute to our current epistemic crisis.

A crisis where citizens lose the ability to tell what is real and what is true.

The implications go far beyond the Covid origin controversy; they go to the heart of our current democratic struggles. To understand this, we need one more stop on this already too-long journey:

We need to talk about information flow.

Chapter 4: Democratic backsliding


Information shapes our perception of reality; it has always worked like that. For good or bad, for brilliant or horrible. There is a reason why dictators throughout history have tried to control information flow: by buying up or shutting down media outlets, or by silencing critics. Repression, censorship, propaganda, and disinformation are tools in a kit for manipulating people with information, for shaping public sentiment and perceptions. It works, not perfectly, but often well enough to entrench power.

Much of the second half of the last century was characterized by the emancipation of information from this centralized model. Uncoupling information from autocratic power was foundational. Think about independent newspapers, the fourth estate, private publishers, and citizens all working together to free information from its shackles and compete for the best ideas. That’s how democracies were supposed to work, after all. At the turn of the century, information technologies and their ability to empower and connect people were seen as a natural progression towards a more equal and just society.

Especially with the rise of broadband internet and social media, many hoped that with the power of a democratic information sphere came the power of a democratic society. If information could flow freely in an ever more connected world, people would see the fruits of democratic societies, take power, and liberate themselves from their oppressors. That’s what we thought.

“The more freely information flows, the stronger the society becomes, because then citizens of countries around the world can hold their own governments accountable. They can begin to think for themselves.” — Barack Obama

Unfortunately, it did not turn out this way. Today, democracies are in decline worldwide and autocracies are on the rise, often empowered by social media. How the hell did that happen?

Somewhere along the way, something must have gone wrong.

The internet and social media promised to liberate information from centralized control. Elon Musk naively advocated his vision of Twitter as a free speech absolutist zone, free of any moderation, censorship, and control, and promptly the platform became hostile to democratic discourse. Today everybody can post and share information online with most of the world. But does that mean information truly flows freely?

I believe a systemic view reveals a devastating picture. Our information sphere is currently broken, and its many asymmetries lean against the public good.

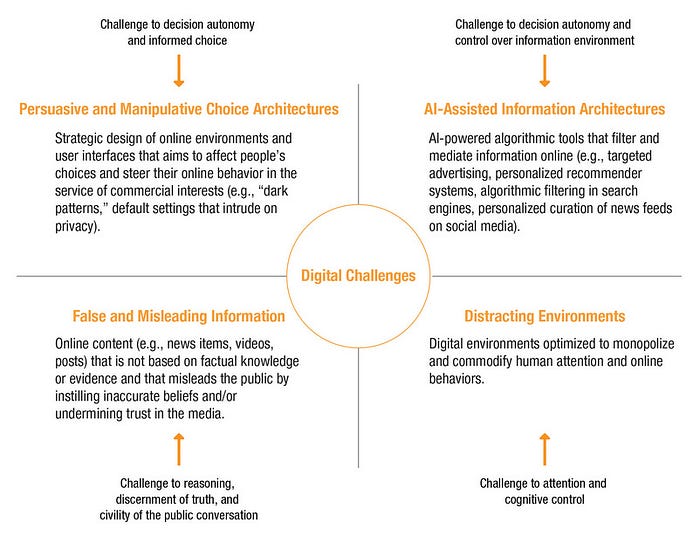

In my opinion, there are currently four major (and related) issues that obstruct free information flow:

- noise pollution can prevent unpopular but valuable information from flowing freely

- velocity hacking creates toxic information pathogens that outcompete normal information products

- information cascades restructure social connections and change the landscape of information flow

- algorithmic & choice architectures on social media platforms manipulate information flow in service of financial interests

This is the asymmetric environment in which our public discourse about science (& everything else) is currently operating.

By controlling how and what information flows in our info sphere, discourse manipulators and velocity hackers (as well as social media companies) shape our perception of reality in their own interest, not in the public interest or for the public good. This is not only a problem for science and scientific issues; it poses serious challenges to our agency and to democracy.

Democracy in the information age presupposes an equality of influence, yet even before our voices are drowned out by the noise on social media, or overwritten by velocity hacking information combatants, we lose our agency and decision autonomy to systems we don’t fully understand.

Maybe worst of all, these systems distract us so effectively that too many of us do not even care to understand why.

(Well, if you kept your attention and read until here, congratulations on your iron focus)

Conclusion

We live in a society exquisitely dependent on science and technology, in which hardly anyone knows anything about science and technology. — Carl Sagan

This article started with a naive question:

How much should we think about the role of information in society?

The better question would be: How could we allow ourselves not to think about it for so long?

Our new information systems are currently incredibly vulnerable to information pathogens and easily exploited by profiteers and bad actors. While we get distracted by our emotional culture war fights and disagreements, a cottage industry of information peddlers is stoking the flames of conflict for entertainment, influence, profit, or power.

In the attention economy, social media platforms became behemoths, but the social fabric withered. Some news outlets became dominant, but diversity and journalistic standards declined overall. A few influencers were elevated to become incredibly wealthy thought leaders, gurus, and prophets, but intelligent discourse has mostly left the public sphere.

In the information age, the flow of information certainly seems to follow the laws of power much more than the laws of thermodynamics.

I believe the idea of free information flow in the attention economy is a myth. A mere fiction that we either tell ourselves or that is being sold to us by the currently powerful.

A fiction we have to outgrow to create a better, freer, and more democratic information sphere. We have to understand that the battleground of information is not level, but asymmetric. Information pathogens are merely one illness we have to cure on a long list of ailments, including the fragmentation, polarization, and fragility of our current democratic societies.

Democratic backsliding specifically is a complex problem with many layers, but we would be naive to assume that our information sphere is not one of them.

As I have argued elsewhere, we humans need a software upgrade in the information age. We need to adapt to our new circumstances or risk falling back into darkness.

The whole point of the Enlightenment, the scientific revolution, and our educational and epistemic institutions was to empower individuals to find a way out of their self-imposed immaturity, to take agency over their lives, and to make informed decisions.

So that part is on us, collectively.

And that hard work has just started.

Disclaimer:

There is no monetization on my articles. As always, my hope and goal is to educate and equip citizens with conceptual tools and new perspectives to make sense of the world we inhabit. This is why I do not want it locked behind any type of paywall.

I see this work as a public good that I send out into the void of the internet in hopes others will get inspired to act.

So feel free to use, share or build on top of this work, I just ask you to properly attribute (Creative Commons CC-BY-NC 4.0).

You can also follow me on social media, but I would prefer for all of us to rather spend less time on it.

Twitter: @philippmarkolin (beware: Twitter is now a hostile & anti-democratic information platform)

Mastodon: @protagonist_future@mstdn.science

Substack: https://protagonistfuture.substack.com/

Before anybody asks: So why not just make information pathogens & cascades with good information or scientific content? You know, like a self-spreading vaccine against disinformation?

I’ll say this much here: Researchers are looking into this.

However, the problem with scientific content specifically is that it has very poor velocity that cannot be optimized dramatically, because science is bound by fact.

Science is also a myth buster: it often contradicts our preconceived notions rather than confirming them. We don’t want to click on things that challenge us.

On top of that, most scientific content is either complicated, nuanced, boring, technical, jargoned, or unintuitive. It is not accessible, sexy, or easy to optimize.

All this means that scientific information flows poorly through society, and it needs help through public education and scientific outreach, as well as amplification by public broadcasting, governments, communities, and societies.

But expect more on this topic another time.

Cite this work:

Markolin P., “The rise of information pathogens”, March 21, 2023. Free direct access link: